Word Vector

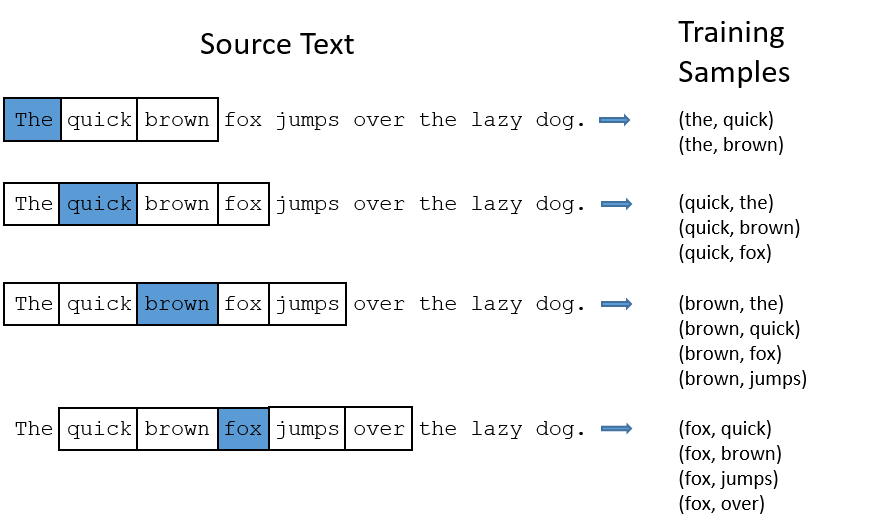

Skip-gram model

The main idea behind the skip-gram model is this: it takes every word in a large corpus and pairs it, one by one, with each of the words that surround it within a defined ‘window’. These (focus word, context word) pairs are used to train a neural network that, once trained, predicts for every word in the vocabulary the probability that it appears in the window around the focus word.
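The idea can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation. The names `skipgram_pairs`, `SkipGram`, `window`, `dim` and `lr` are hypothetical. The output layer is assumed to be a full softmax over the whole vocabulary, chosen for clarity; practical word2vec implementations replace it with cheaper approximations such as negative sampling or hierarchical softmax. After training, the rows of `w_in` are the word vectors.

```python
import math
import random


def skipgram_pairs(tokens, window=2):
    """Pair every focus word with each word at most `window` positions away."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SkipGram:
    """Hypothetical toy model: one hidden layer, full softmax output (assumption)."""

    def __init__(self, vocab, dim=10, seed=0):
        rng = random.Random(seed)
        self.index = {w: k for k, w in enumerate(vocab)}
        # Input vectors: row k is the learned word vector for vocab[k].
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]
        # Output vectors: used to score each vocabulary word as a context word.
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]

    def predict(self, center):
        """Probability of each vocabulary word appearing in the window of `center`."""
        h = self.w_in[self.index[center]]
        scores = [sum(a * b for a, b in zip(h, u)) for u in self.w_out]
        return softmax(scores)

    def train_step(self, center, context, lr=0.05):
        """One SGD step on -log p(context | center); returns the loss before the update."""
        h = self.w_in[self.index[center]]
        o = self.index[context]
        probs = self.predict(center)
        # Gradient of the loss w.r.t. the scores is probs - one_hot(context).
        err = [p - (1.0 if k == o else 0.0) for k, p in enumerate(probs)]
        grad_h = [sum(e * u[d] for e, u in zip(err, self.w_out)) for d in range(len(h))]
        for e, u in zip(err, self.w_out):
            for d in range(len(h)):
                u[d] -= lr * e * h[d]
        for d in range(len(h)):
            h[d] -= lr * grad_h[d]  # updates the focus word's vector in place
        return -math.log(probs[o])


tokens = "the quick brown fox jumps over the lazy dog".split()
pairs = skipgram_pairs(tokens, window=2)
model = SkipGram(sorted(set(tokens)), dim=10)

losses = []
for epoch in range(200):
    losses.append(sum(model.train_step(c, o) for c, o in pairs) / len(pairs))
first_loss, last_loss = losses[0], losses[-1]

# Most likely context words for "fox" after training.
probs = model.predict("fox")
print(sorted(zip(probs, model.index), reverse=True)[:4])
```

With `window=1`, the sentence `a b c` yields the pairs `(a, b)`, `(b, a)`, `(b, c)` and `(c, b)`: each word is paired with its immediate neighbours only. The full softmax touches every vocabulary word on each step, which is harmless for a toy vocabulary but becomes the main cost on a real corpus.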
