RNN Entry

If we could connect previous information to the present task, it’s obviously helpful. So the LSTM(Long Short Term Memory networks) developed. But in some traditional methods long-term dependency meet a lot of difficulties, and LSTMs are explicitly designed to avoid the long-term dependency problem.

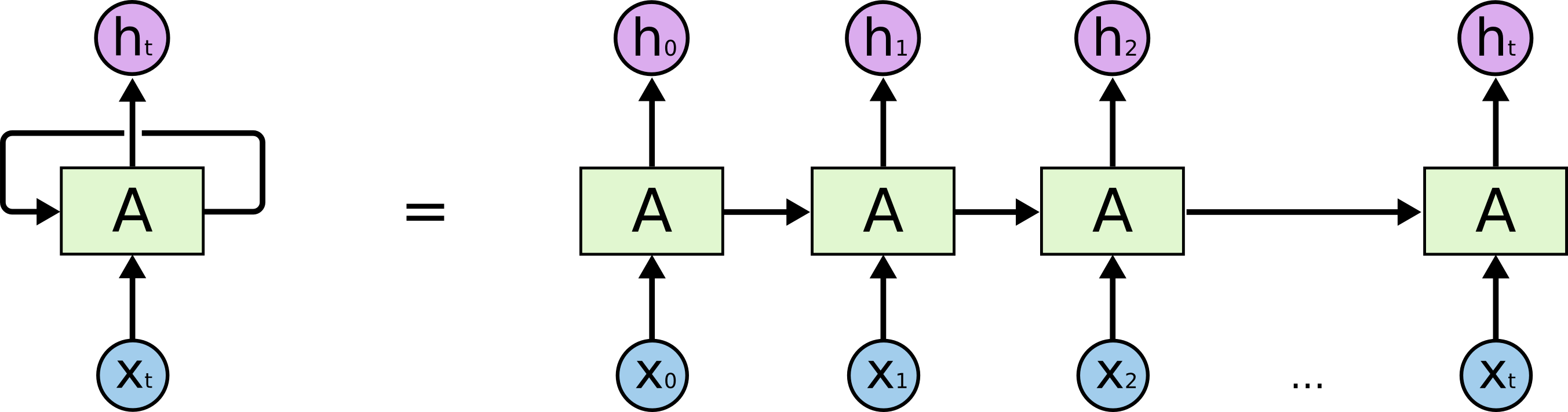

All recurrent neural networks have the form of a chain of repeating modules of neural network. And this kind of modules can be designed into a lot of structures.

这个世界上有很多隐秘而伟大的现象,过去我们可以通过初春的嫩芽,新出现的燕子发现这种惊奇,但是随着认识的逐渐增进,这些发现都藏到复杂精妙的公式逻辑背后了,我们不断思考和探索,一旦有所发现还是会像我们第一次看到下雪,第一次吃雪糕那样惊奇和欣喜的。

Enjoy Reading This Article?

Here are some more articles you might like to read next: